Have you ever considered which is better: multiple smaller Kafka topics, or one big topic that aggregates all messages?

Kafka Consumer

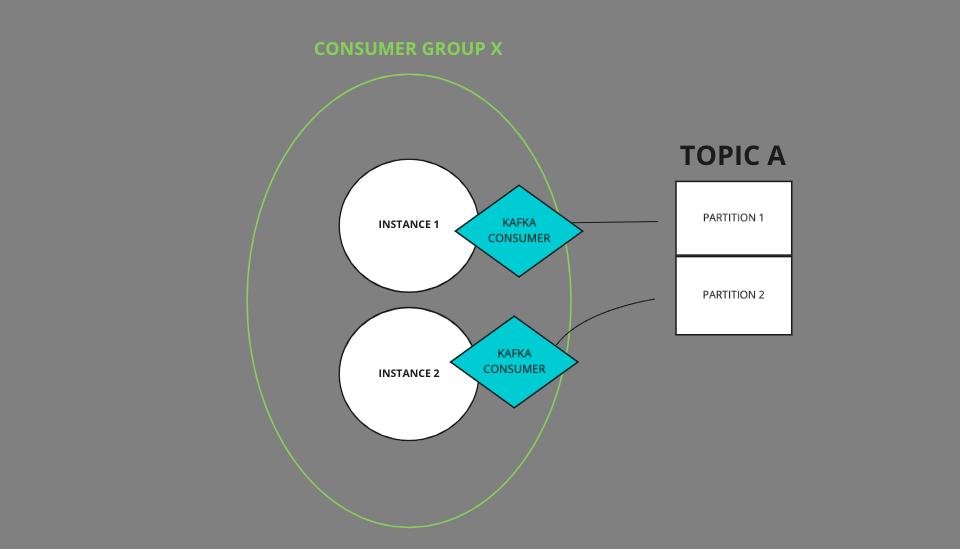

First things first: we need to say a word about the Kafka consumer. The most important thing you must know is how a topic works and what topic partitioning is. To consume messages from a Kafka topic, you create a Kafka consumer and subscribe it to the topic. Every Kafka consumer belongs to a consumer group, identified by a group name. Within a group, each partition is assigned to exactly one consumer, so you can consume the whole topic using multiple physical instances of the application, with each instance processing distinct messages.

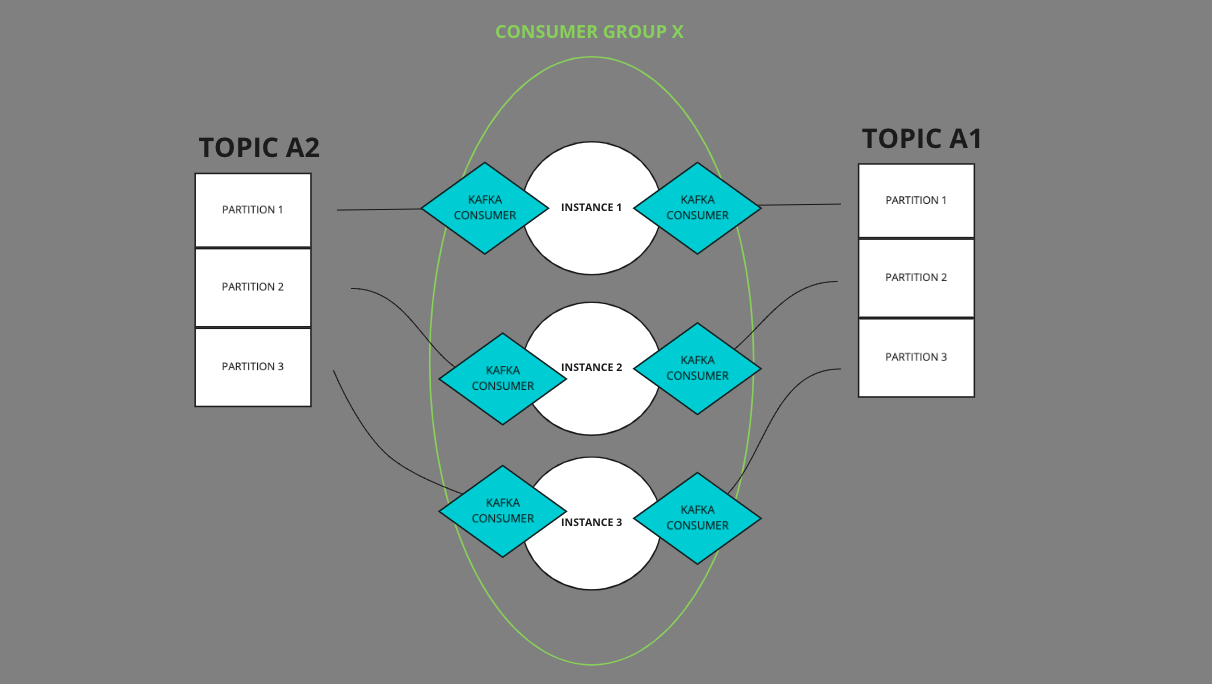

Let’s examine the following chart:

What if you want to optimize your Kafka topic consumption now? What choices do we have? Let’s go through the possible options, and then try to answer the question this post started with.

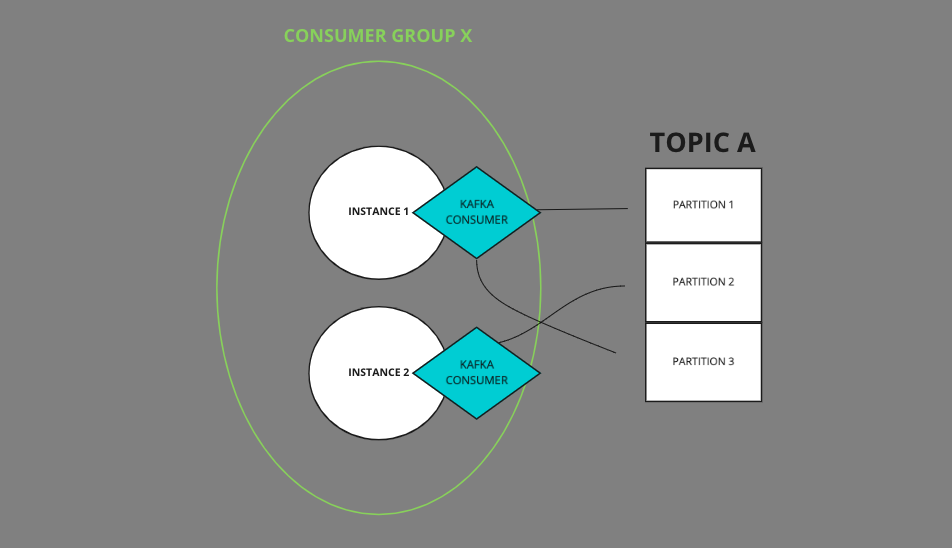

Try no. 1 - Add more partitions

Could we just add more partitions to the topic?

Yes, we can. It’s a nice try, not bad. What we get is that one of our existing consumers will consume two partitions instead of one. When we really think about it … the previous setup was more efficient 😕 In this shape one thread consumes messages from two partitions, whereas before each thread consumed exactly one partition. KafkaConsumer is not thread-safe, meaning that messages have to be polled from a single thread; just look at KafkaConsumer.java.

Comment from KafkaConsumer.java

* The Kafka consumer is NOT thread-safe. All network I/O happens in the thread of the application

* making the call. It is the responsibility of the user to ensure that multi-threaded access

* is properly synchronized. Un-synchronized access will result in {@link ConcurrentModificationException}.

Lesson 1 - adding more partitions makes sense when we have more app instances than partitions

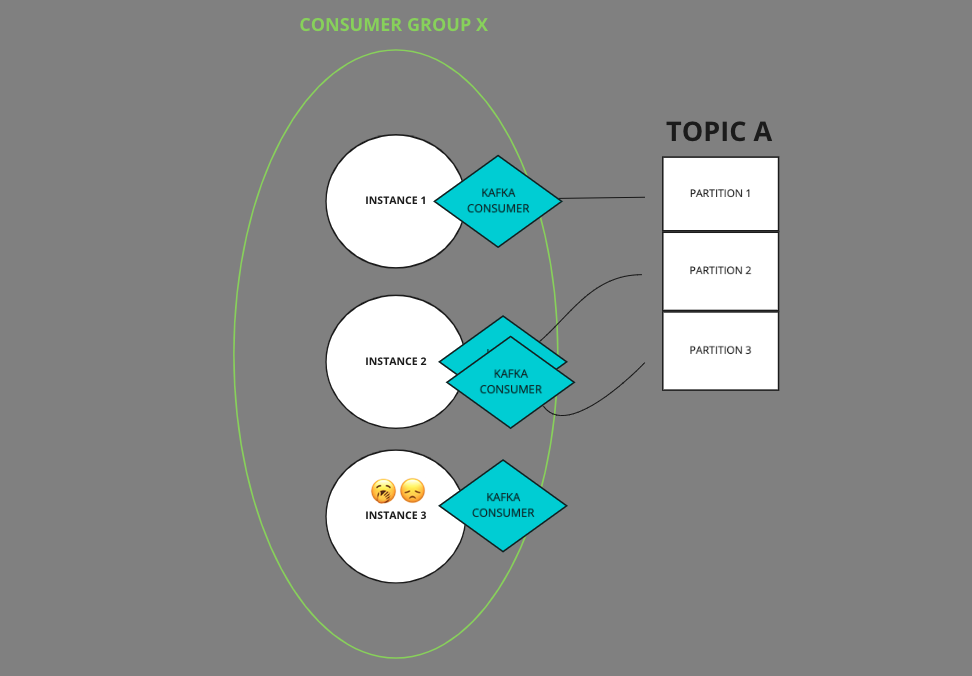

Try no. 2 - Add more instances

Could we just add more app instances, and with them more Kafka consumers?

In our case … it does nothing 😅 With two partitions and three app instances, one of the instances will not be assigned any partition.

Lesson 2 - adding more instances makes no sense if we have fewer partitions than instances
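To make Lessons 1 and 2 concrete, here is a minimal sketch of how the partitions of a single topic get spread over the consumers of one group. It only models the idea (contiguous ranges, with the first consumers taking the remainder) and is not Kafka's actual assignor code; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of partition assignment inside ONE consumer group.
// Assumption: a single topic, partitions handed out in contiguous ranges.
class AssignmentSketch {

    // Returns, for each consumer index, the list of partition numbers it owns.
    static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> result = new ArrayList<>();
        int base = partitions / consumers;   // every consumer gets at least this many
        int extra = partitions % consumers;  // the first `extra` consumers get one more
        int next = 0;
        for (int c = 0; c < consumers; c++) {
            int count = base + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                owned.add(next++);
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // Try no. 1: 3 partitions, 2 instances
        System.out.println(assign(3, 2)); // prints [[0, 1], [2]]
        // Try no. 2: 2 partitions, 3 instances
        System.out.println(assign(2, 3)); // prints [[0], [1], []]
    }
}
```

The empty list in the second case is the idle instance: it is running, but it has nothing to consume.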

Try no. 3 - Create more Kafka consumers per instance

Could we speed things up by creating more Kafka consumers on each instance? That’s what @KafkaListener(concurrency = X) does in Spring.

It’s basically a very similar situation to the previous one. One of our instances could, in theory, be assigned both partitions. That leads to a situation where one of the physical instances is not assigned any partition at all.

Lesson 3 - Kafka consumer concurrency makes sense only if you have more partitions than app instances
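A quick way to sanity-check a concurrency setting is to count consumers against partitions. The sketch below is plain arithmetic, not Spring code: it assumes every instance runs the same number of consumers in the same consumer group, and all names are hypothetical.

```java
// Counts how many consumers in a group cannot get a partition.
// Assumption: every instance runs `concurrency` consumers in the same group.
class ConcurrencySketch {

    static int totalConsumers(int instances, int concurrency) {
        return instances * concurrency;
    }

    // Each partition goes to exactly one consumer of the group,
    // so any consumers beyond the partition count stay idle.
    static int idleConsumers(int partitions, int instances, int concurrency) {
        return Math.max(0, totalConsumers(instances, concurrency) - partitions);
    }

    public static void main(String[] args) {
        // 2 partitions, 2 instances, concurrency 2: 4 consumers, 2 of them idle
        System.out.println(idleConsumers(2, 2, 2)); // prints 2
        // 4 partitions, 2 instances, concurrency 2: every consumer has work
        System.out.println(idleConsumers(4, 2, 2)); // prints 0
    }
}
```

Note that the count alone does not say where the idle consumers live; as described above, both busy consumers may end up on the same instance.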

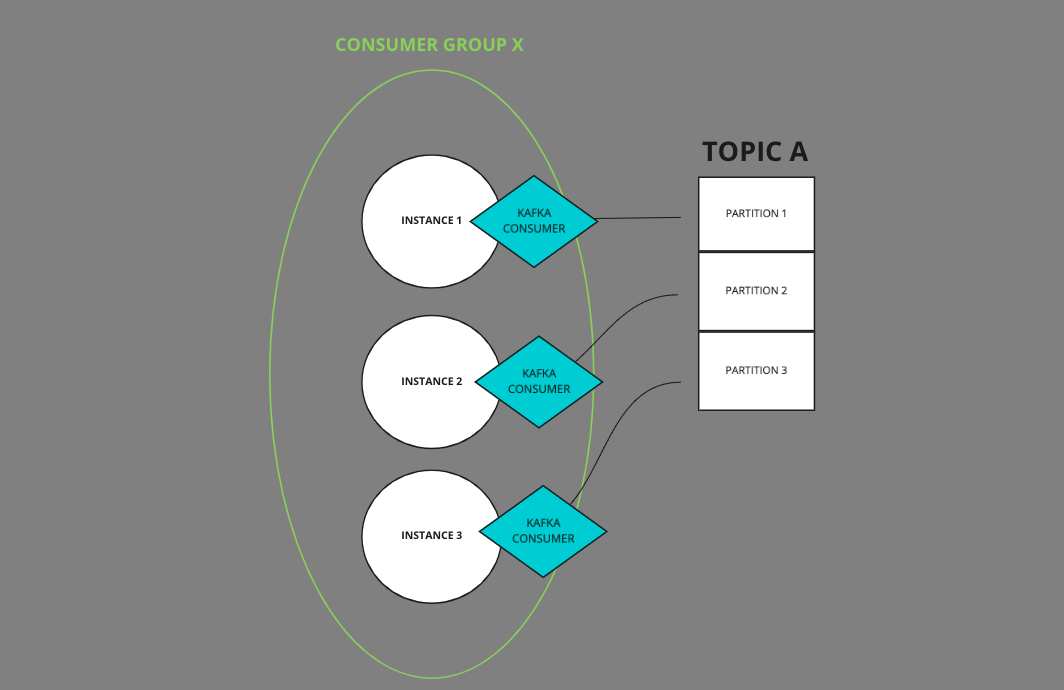

Try no. 4 - Make partitions and app instances equal

What if we make the number of partitions and the number of app instances equal?

It’s the most common and most efficient case: each physical app instance has one Kafka consumer, and every consumer is assigned exactly one topic partition. The load is distributed evenly across all instances.

Lesson 4 - the optimal setup is to have one partition for each instance. That way our resources are distributed most efficiently.

Can we do something more? 🧐

Now we are getting to the point of this post. How can we optimize the consumer setup above? Adding more Kafka consumers makes sense only when we use a different consumer group for each set of consumers, but … then we would process the same messages in multiple consumers, which is not the best approach, right?

One possible optimization is to not use one huge topic for everything, but to split it into smaller topics. It could be divided by kind, by genre, or by any other discriminator that makes sense in your case. In the end, a message is sent to a topic such as “topic.name.genre1” or “topic.name.genre2”.
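As a rough illustration of the split, the producer side only needs a small routing rule that derives the topic name from the discriminator. The sketch below assumes the “topic.name.genre” naming from the example above; the class name, the lowercase normalization, and the genre values are hypothetical.

```java
import java.util.Locale;

// Derives a per-genre topic name from a base name and a discriminator.
class TopicRouter {

    private final String baseTopic;

    TopicRouter(String baseTopic) {
        this.baseTopic = baseTopic;
    }

    // Assumption: genres are normalized to lowercase without surrounding spaces.
    String topicFor(String genre) {
        if (genre == null || genre.trim().isEmpty()) {
            throw new IllegalArgumentException("genre must not be blank");
        }
        return baseTopic + "." + genre.trim().toLowerCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        TopicRouter router = new TopicRouter("topic.name");
        System.out.println(router.topicFor("genre1")); // prints topic.name.genre1
        System.out.println(router.topicFor("Genre2")); // prints topic.name.genre2
    }
}
```

Keeping the rule in one place means producers and consumers agree on the topic names without hard-coding them in several spots.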

Now we have scaled up our message consumption. We can consume the same set of data with more than one Kafka consumer per instance, without worrying about consuming the same messages in different Kafka consumers.

Summary

Please remember that there is no single golden rule for optimization. It always depends on the case, so you need to think. Analyze your situation and, depending on the case, tweak the right knobs. Cheers 🍻